
Chassis: The exterior housing of the entire computer. The server can use a standard PC chassis or a server specific chassis; the type of chassis is often called the server's form factor. Types of chassis include:

Standard PC: No special server functional considerations.

A typical standard PC full tower chassis.

Rackmount: The objective is to be both compact and conveniently located close to the main network connectivity devices that are also mounted in the rack. An additional consideration is that the rackmount server shares the rack with other servers (as many as 42 of them within 6.5 feet of each other), which facilitates the construction of the server framework. Rackmount servers are mounted in the telecommunications rack, which is itself located within the telecommunications room, which should be physically secured. Rackmount chassis fulfill the concurrent connections, server framework, and security server specific requirements.

Cabinet: The objective of the cabinet chassis is a large internal volume, which allows for large motherboard and CPU form factors as well as large numbers of internal components, including hard drives. Many cabinet chassis are also rackmount, adding the objectives of that form factor, including proximity to the network connectivity devices and being secured in the telecommunications room. The cabinet chassis can add its own physical security in the form of a thicker metal outer shell with locks. Cabinet chassis fulfill the processing power, concurrent connections, server framework, and security server specific requirements.

Blade: The blade server form factor extends the rackmount objectives: it is even more compact than a rackmount server while still being close to the network connectivity devices and locked away with them in the telecommunications room. In general, a full "blade center" (a rackmount chassis holding the individual blades) can fit about 150% to 180% more servers into the same amount of rack space. Blade chassis fulfill the concurrent connections, server framework, and security server specific requirements.

Custom: The objective of a custom server chassis is to fulfill the organization's particular, sometimes unique, requirements, which may include a very large internal volume or added physical security measures. Custom chassis therefore fulfill the processing power and security server specific requirements, but are not limited to these.
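The rack density figures above reduce to simple arithmetic. This minimal sketch assumes the EIA-310 rack unit (1U = 1.75 inches), a 42U rack filled entirely with 1U rackmount servers, and the 150% to 180% blade gain quoted above; the function names are hypothetical.

```python
# Rack-space arithmetic for rackmount vs. blade chassis (illustrative sketch).
RACK_UNIT_INCHES = 1.75  # height of one rack unit (1U) per EIA-310


def rack_height_inches(units: int) -> float:
    """Mounting height occupied by `units` rack units."""
    return units * RACK_UNIT_INCHES


def blade_servers(rackmount_count: int, gain_percent: float) -> int:
    """Servers that fit in the same space if blades add `gain_percent` more."""
    return int(rackmount_count * (1 + gain_percent / 100))


RACK_UNITS = 42
ONE_U_SERVERS = RACK_UNITS  # assumption: every rack unit holds one 1U server

height = rack_height_inches(RACK_UNITS)
print(f"{RACK_UNITS}U of mounting space = {height} in ({height / 12:.2f} ft)")
for gain in (150, 180):
    print(f"+{gain}% density: about {blade_servers(ONE_U_SERVERS, gain)} blades")
```

In practice some rack units go to switches, patch panels and power distribution, so real counts are lower. The 73.5 inches of mounting space sits inside a cabinet that is typically somewhat taller, which is consistent with the 6.5 feet figure above.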


Power supply: The internal power supply of the PC-based server converts standard wall AC current into the much lower DC voltages required by the digital electronic components of the computer. Internal power supplies must be able to provide enough power, measured in watts, to all of the internal components. Server power supplies also come in various physical sizes and connector combinations to match the connectors on the motherboard and devices they feed; this is called the power supply's form factor. Server power supplies are also made in fault tolerant configurations that use multiple redundant (fail-over) power supplies.
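Sizing a server power supply amounts to adding up the components' power draw, leaving headroom, and then adding spare units for fail-over. The sketch below assumes a 25% headroom margin and N+1 redundancy; every wattage in it is a hypothetical placeholder, not a vendor figure.

```python
# Power supply sizing sketch: total the DC load, add headroom, then add
# redundant (fail-over) units. All wattages below are hypothetical.
import math


def required_watts(loads: dict[str, float], headroom: float = 0.25) -> float:
    """Sum of component loads plus a safety margin (0.25 = 25%)."""
    return sum(loads.values()) * (1 + headroom)


def supplies_needed(total_watts: float, unit_watts: float, spares: int = 1) -> int:
    """Identical supplies needed so the load survives `spares` unit failures."""
    return math.ceil(total_watts / unit_watts) + spares


# Hypothetical peak loads in watts, for illustration only.
loads = {
    "2 CPUs": 2 * 95,
    "motherboard and chipset": 50,
    "16 DIMMs": 16 * 5,
    "6 hard drives": 6 * 12,
    "expansion cards": 60,
    "fans": 30,
}

total = required_watts(loads)
print(f"Load with headroom: {total} W")
print(f"700 W supplies for N+1 redundancy: {supplies_needed(total, 700)}")
```

With two 700 W supplies, either one alone can carry the estimated load, so one can fail (or be swapped) without taking the server down.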


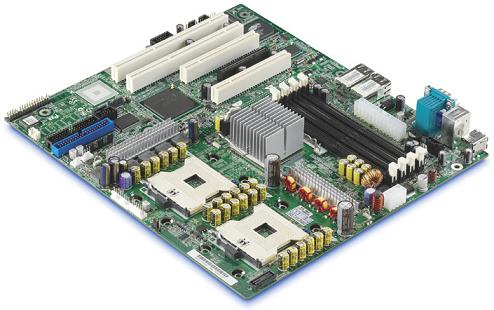

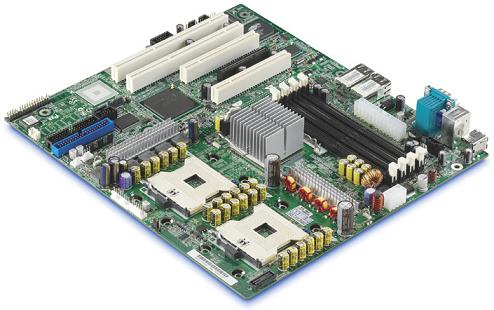

Motherboard: The main circuit board of the computer, to which the CPU(s), RAM and expansion cards are physically mounted. Because of this, the motherboard determines in particular the number and types of supported processors; the amount, type, and speed of supported RAM; and the number and bus types of supported expansion cards. The motherboard therefore directly determines the overall processing power and performance of the computer. This starts with the choice of chipset, but it should be noted that different motherboard manufacturers can implement the same chipset differently, which also affects the performance of the computer. Motherboards can also include their own set of integrated peripherals. Including these on the motherboard frees expansion slots for other, user selected expansion cards. In general, if the server chassis has the room (i.e. it is not a rackmount or a blade), the motherboard should have as few integrated peripherals as possible; in other words, all peripherals should be expansion cards, which makes them easy to replace should they fail. The motherboard's expansion buses and the number of slots available are an important consideration. While PCI is sufficient for many devices, a 1000BASE-T (Gigabit Ethernet) interface card alone has a throughput of 125MB/sec, and an entire standard 32-bit/33MHz PCI bus has a maximum throughput of 133MB/sec, shared by every device on it. Attaching one more device to the bus, such as an ATA133 controller, will therefore exceed the throughput of the bus: the bus will not be able to read the hard drive at 133MB/sec and transmit the data out of the Gigabit Ethernet card at the same time. Either the motherboard will need multiple PCI buses or a superior bus such as PCI-Express. Motherboards can resolve: processing power, performance, concurrent connections, server framework, availability, and security server specific requirements.

A typical server class motherboard with dual Socket 603 support for two Intel Xeon server class processors.
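The PCI bottleneck described above can be checked numerically. This sketch assumes a conventional 32-bit/33MHz PCI bus and treats each device's rated maximum as its demand, which is a worst case; real traffic is burstier. The function name is hypothetical.

```python
# Shared PCI bus budget check for the Gigabit Ethernet + ATA133 example.
PCI_BUS_BYTES = 4        # classic PCI is 32 bits (4 bytes) wide
PCI_CLOCK_MHZ = 33.33    # at roughly 33 MHz
PCI_BUS_MB_S = PCI_BUS_BYTES * PCI_CLOCK_MHZ  # about 133 MB/sec, shared


def bus_load(device_mb_s: list[float], bus_mb_s: float = PCI_BUS_MB_S) -> float:
    """Fraction of the shared bus the devices would need at full speed."""
    return sum(device_mb_s) / bus_mb_s


gigabit_nic = 1000 / 8   # 1000 Mbit/sec = 125 MB/sec
ata133 = 133             # ATA133 controller burst rate, MB/sec

for label, devices in [("NIC alone", [gigabit_nic]),
                       ("NIC + ATA133", [gigabit_nic, ata133])]:
    load = bus_load(devices)
    status = "fits" if load <= 1 else "oversubscribed"
    print(f"{label}: {load:.0%} of the PCI bus ({status})")
```

Because every device on a PCI bus shares the same 133MB/sec, adding the second device roughly doubles the demand on a bus that was already nearly full.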


CPU: This is the one component in which the PC industry is superior to the CPUs made specifically for other classes of general purpose computer, such as the minicomputer or mainframe. This is because of the enormous economy that the PC industry represents, which has funneled billions of dollars to Intel and AMD. Because of their head-to-head competition for market share, these two companies have reinvested billions of dollars into research and development, making PC industry processors among the most powerful single chip computers in the world. The problem, however, is how many of them the PC industry chipsets and motherboards support. In general, most chipsets and motherboards support a maximum of four processors. Beyond that, the chipset and the motherboard must be custom designed and manufactured, which carries a huge "tooling up" cost for the manufacturer (read: millions). CPUs fulfill server specific processing power requirements measured by:

Number of Cores: Since servers must handle potentially massive processing workloads, the number of cores in the machine is the single greatest factor contributing to the server's ability to multitask. A single 3.9GHz CPU may outperform a system with four 2.0GHz CPUs when executing one process at a time, but the four processor system will embarrass the single CPU system once dozens of processes are launched simultaneously, which is exactly what servers do for a living.
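A deliberately simple model shows why core count wins once many processes run at once. It assumes equal-sized, independent, CPU-bound jobs, identical work per clock cycle on both systems, and no memory, I/O or scheduling overhead; none of these hold exactly on real hardware, and the function name is hypothetical.

```python
# Toy model of CPU-bound multitasking (illustrative assumptions only):
# every job needs the same number of clock cycles, both systems do the same
# work per cycle, each core runs one job at a time, and there is no memory,
# I/O or scheduling overhead.
import math


def batch_seconds(jobs: int, cores: int, ghz: float, gcycles_per_job: float = 1.0) -> float:
    """Seconds to finish `jobs` equal jobs spread across `cores` cores."""
    rounds = math.ceil(jobs / cores)  # jobs run in waves of `cores` at a time
    return rounds * gcycles_per_job / ghz


for jobs in (1, 4, 24):
    single = batch_seconds(jobs, cores=1, ghz=3.9)
    quad = batch_seconds(jobs, cores=4, ghz=2.0)
    print(f"{jobs:>2} jobs: one 3.9GHz CPU {single:.2f}s, four 2.0GHz CPUs {quad:.2f}s")
```

Under these assumptions the single 3.9GHz CPU wins with one job, while the four 2.0GHz CPUs finish 24 jobs in about half the time.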

FSB Throughput: All of the core speed and processing power on Earth is useless if the CPU cannot get information from the outside world fast enough to keep its computing engine running constantly at core speed, and it is equally useless if it computes vast amounts of information that cannot get back out to the outside world again. FSB throughput is a direct measurement of this capability. In multiprocessor motherboards there is another issue to consider: while each CPU may have a 1066MHz FSB (more precisely 1066 MT/sec, a 266MHz clock carrying four transfers per cycle), can the chipset sustain this DTR for all of the CPUs at the same time? This capacity is called the motherboard chipset's total data throughput fabric.
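The FSB's peak throughput is its width times its transfer rate. This sketch assumes a 64-bit FSB at 1066 MT/sec and a hypothetical chipset fabric figure chosen only to illustrate the question raised above; real chipsets publish their own limits, and the function names are hypothetical.

```python
# Front-side bus (FSB) bandwidth vs. a chipset's total throughput fabric.
FSB_WIDTH_BYTES = 8  # the FSB is 64 bits (8 bytes) wide


def fsb_gb_s(megatransfers: float) -> float:
    """Peak GB/sec of one 64-bit FSB at `megatransfers` MT/sec."""
    return megatransfers * FSB_WIDTH_BYTES / 1000


def fabric_shortfall(cpus: int, megatransfers: float, fabric_gb_s: float) -> float:
    """GB/sec of combined CPU demand the chipset fabric cannot carry (0 if none)."""
    demand = cpus * fsb_gb_s(megatransfers)
    return max(0.0, demand - fabric_gb_s)


FSB_MTS = 1066.67      # "1066MHz" FSB: 266.67MHz clock, four transfers per clock
FABRIC_GB_S = 21.3     # hypothetical chipset fabric, for illustration only

print(f"One FSB: {fsb_gb_s(FSB_MTS):.2f} GB/sec")
for cpus in (1, 2, 4):
    gap = fabric_shortfall(cpus, FSB_MTS, FABRIC_GB_S)
    print(f"{cpus} CPU(s): shortfall {gap:.2f} GB/sec")
```

With this hypothetical fabric, two processors run unconstrained but four would demand more than the chipset can move.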

Cache: No modern CPU could remain at a core speed often a dozen times the FSB speed if it had no core speed cache to fetch data from. Furthermore, even the cache would be useless if the cache manager, the MMU - Memory Management Unit, which is itself part of the core architecture of the CPU, could not keep it filled. Cache is measured, in order of priority, by amount, speed, and levels. In the end, the more cache, the better. But cache comes at a premium; otherwise the entire RAM space would simply be made of high speed cache and the problem of managing it would not exist. Cache also runs at different speeds and is therefore split into, and managed as, levels. With Intel Family 15, Intel introduced a third level of cache, L3, meaning that there are three discrete speeds of cache in these systems. In general, L1 - Level 1 cache resides onboard the CPU and runs at core speed; the only time the CPU reaches around it is when it does not hold the next instruction or data that the core needs. L2 - Level 2 cache was originally external to the CPU core and ran at FSB speed; however, beginning with the Intel P6 Family (Pentium Pro, II and III), L2 cache was sometimes built into the CPU and could run at full core speed, a fraction of core speed, or FSB speed. L3 - Level 3 cache is in general external to the CPU core and runs at FSB speed.
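How cache levels hide slow RAM can be estimated with the textbook average memory access time formula, AMAT = hit time + miss rate × miss penalty, applied level by level. The latencies (in CPU cycles) and miss rates below are hypothetical round numbers, not measurements of any particular Intel or AMD processor.

```python
# Average memory access time (AMAT) through a cache hierarchy.
# All latencies and miss rates below are hypothetical round numbers.


def amat(levels: list[tuple[float, float]], memory_latency: float) -> float:
    """levels: (hit latency, local miss rate) pairs, fastest level first."""
    time = memory_latency
    for hit_latency, miss_rate in reversed(levels):
        # Every access pays this level's hit time; misses fall through.
        time = hit_latency + miss_rate * time
    return time


hierarchy = [(3, 0.05),   # L1: 3 cycles, 5% of accesses miss
             (14, 0.30),  # L2: 14 cycles, 30% of L1 misses also miss here
             (40, 0.50)]  # L3: 40 cycles, 50% of L2 misses go to RAM
RAM_CYCLES = 200

print(f"No cache: {RAM_CYCLES} cycles per access")
print(f"L1 only:  {amat(hierarchy[:1], RAM_CYCLES):.1f} cycles")
print(f"L1-L3:    {amat(hierarchy, RAM_CYCLES):.1f} cycles")
```

Even with these rough figures, the hierarchy brings the average access from 200 cycles down to under 6, which is why more cache (and more levels) pays off.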

Core Architecture: The actual internal design of the CPU determines its processing efficiency and speed. It is the core architecture that separates generations of processors, e.g. the Pentium III vs. the Pentium 4, or more accurately Family P6 vs. Family 15. Core architecture is the cutting edge of CPU design and where the performance war between the engineers at Intel and AMD is constantly being fought. In general, the later the generation, the greater its computing efficiency, which is closely tied to its manufacturing process: the smaller the process (e.g. 45nm vs. 65nm), the more transistors can fit into the same chip area. The more transistors, the more intelligence the CPU can be endowed with, including such core architectural components as multiple cores, multiple pipeline decoders, smarter MMUs, etc. Processors designed specifically as server products are certainly optimized for raw processing power, starting with the core architecture.


RAM: Because the basic expectations of the server are raw processing power and the reliable maintenance of multiple concurrent connections, servers in general must have much more RAM than the average standalone PC. When evaluating RAM for the server, the total amount installed is the most important consideration. RAM and cache work together: with ample cache, the MMU can stay ahead of the processor core(s) indefinitely, as long as there is ample RAM holding all the code and data the core may require next. The speed of the RAM is not nearly as important as the fact that it is there at all and holds the required data. The MMU moves data out of the slower main RAM into the faster cache RAM, where the speed of the cache can keep up with the core. But if there is not enough RAM, data that does not fit must stay on the hard drive, and when it is needed it must be fetched from the hard drive, which is many thousands of times slower than even the slowest form of RAM. This is called virtual memory, and it is kept in the swap file. All modern 32-bit (and now 64-bit) operating systems keep a swap file; otherwise they would run out of RAM and crash when the system loads too many processes. It is the usage of the swap file that drags end user systems to a crawl. Servers cannot afford this and so must have vast amounts of RAM so that virtual memory usage is cut to a minimum, although it is never completely eliminated. RAM can resolve performance and security server specific requirements. Factors in choosing RAM include:

Total Installed Amount: As stated, this is the most important factor in choosing RAM for the server. With modern multitasking operating systems serving large numbers of clients and/or concurrent connections, it is arguable that a server can never have enough RAM.
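The cost of relying on the swap file can be estimated with an effective access time formula: a weighted average of RAM accesses and page faults served from the hard drive. The sketch assumes 60 ns RAM and an 8 ms disk access (both hypothetical round numbers) and ignores secondary costs such as writing modified pages back to disk; the function name is hypothetical.

```python
# Effective memory access time when a fraction of accesses hit the swap file.
# Latencies are hypothetical round numbers in nanoseconds.
RAM_NS = 60            # one main-memory access
DISK_NS = 8_000_000    # one hard-drive page fetch, about 8 ms


def effective_ns(page_fault_rate: float, ram_ns: float = RAM_NS, disk_ns: float = DISK_NS) -> float:
    """Weighted average of RAM hits and page faults served from disk."""
    return (1 - page_fault_rate) * ram_ns + page_fault_rate * disk_ns


for rate in (0.0, 0.00001, 0.001):
    ns = effective_ns(rate)
    print(f"fault rate {rate:.5f}: {ns:,.0f} ns ({ns / RAM_NS:,.0f}x the RAM latency)")
```

With these figures, a page fault rate of just one access in a thousand makes memory on average more than a hundred times slower, which is why servers are given enough RAM to keep paging rare.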

Single vs. Dual channel: Dual channel technology began in some Rambus based systems. Dual channel allows the memory controller to read/write two independent memory modules simultaneously, giving main RAM twice the peak speed, or specifically twice the DTR. On motherboards on which single or dual channel mode can be selected, dual channel runs twice as fast without sacrificing total installed amount, and is the preferred solution.

Speed/Mfg. Technology: Some older technologies, such as EDO and possibly SDRAM, are slow enough to affect overall performance. In modern systems Rambus, also known as RDRAM, has been dropped in favor of the equally fast and far less expensive to manufacture DDR and now DDR2. DDR3, the JEDEC successor to DDR2, is appearing on some systems; the similarly named GDDR3, GDDR4 and GDDR5 are graphics memory technologies used on video cards rather than as regular main memory. Since almost all modern systems use DDR or DDR2, it should be noted that these technologies come in various speeds. Depending on the speed grades involved, dual channel DDR may not be as fast as single channel DDR2.
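Peak memory bandwidth is the channel count times the 8-byte width of each 64-bit channel times the transfer rate. This sketch compares a few configurations to show that whether dual channel DDR beats single channel DDR2 depends on the speed grades; it ignores latency, which also differs between the technologies, and the function name is hypothetical.

```python
# Peak memory bandwidth = channels x 8 bytes per transfer x transfer rate.
BYTES_PER_TRANSFER = 8  # each DDR/DDR2 channel is 64 bits wide


def peak_mb_s(megatransfers: int, channels: int = 1) -> int:
    """Theoretical peak MB/sec; sustained bandwidth is always lower."""
    return channels * BYTES_PER_TRANSFER * megatransfers


configs = [
    ("DDR-400, dual channel", 400, 2),
    ("DDR2-533, single channel", 533, 1),
    ("DDR2-800, single channel", 800, 1),
    ("DDR2-1066, single channel", 1066, 1),
]
for name, mts, ch in configs:
    print(f"{name:<26} {peak_mb_s(mts, ch):>5} MB/sec")
```

Dual channel DDR-400 beats single channel DDR2-533, ties DDR2-800, and falls behind DDR2-1066.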

ECC vs. non-ECC: ECC - Error Correction Code RAM is highly robust: random single bit errors, which while rare do occur often enough in systems with large amounts of RAM, are automatically detected and corrected on the fly. This prevents the data from being corrupted or, worse, the operating system from crashing. ECC is by definition a data integrity solution, fulfilling both security and availability server specific requirements.
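ECC modules in servers typically use a SECDED code (single error correction, double error detection) over 64 data bits plus 8 check bits. The sketch below uses the smaller textbook Hamming(7,4) code instead, which shows the same principle on 4 data bits: parity bits computed on write, rechecked on read, and a nonzero result pointing at the bit to flip back. The function names are hypothetical.

```python
# Hamming(7,4): 4 data bits protected by 3 parity bits. Code bit positions
# are numbered 1-7; parity bits sit at the power-of-two positions 1, 2 and 4.


def hamming74_encode(d: list[int]) -> list[int]:
    """Encode data bits [d1, d2, d3, d4] into a 7-bit code word."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(code: list[int]) -> tuple[list[int], int]:
    """Correct up to one flipped bit; return (data bits, error position or 0)."""
    c = list(code)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # recheck positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # recheck positions 2, 3, 6, 7
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]  # recheck positions 4, 5, 6, 7
    position = s1 + 2 * s2 + 4 * s3  # the syndrome names the bad bit
    if position:
        c[position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]], position


data = [1, 0, 1, 1]
sent = hamming74_encode(data)
received = sent.copy()
received[4] ^= 1  # simulated soft error at position 5
corrected, position = hamming74_decode(received)
print(f"sent {sent}, received {received}, fixed bit {position}, data {corrected}")
```

The syndrome (the three recomputed parity checks) spells out the position of the flipped bit, which is how the correction can happen on the fly in hardware.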

non-Registered vs. Registered vs. Fully Buffered: Because registered and fully buffered RAM modules behave differently at the electronic circuit level, motherboard chipset north bridges can support larger total installed amounts of RAM when these varieties of modules are used rather than normal end user "non-registered" (unbuffered) modules. These modules are more expensive to manufacture, and registered or fully buffered modules are usually combined with ECC capability, which is also more expensive to manufacture; this makes "registered ECC" and "fully buffered ECC" modules significantly more expensive than their plain but otherwise equivalent counterparts. Registered and fully buffered RAM modules fulfill the performance server specific hardware requirement.


Storage Controllers: Servers are normally expected to meet far more demanding data storage capacity, performance and integrity requirements than end user PCs. Some servers, depending on their specific role or mission requirements, can function adequately with standard ATA/SATA or SCSI controllers, but if the server has large data capacity or high performance data storage requirements, these controllers will fall short of expectations. While standard controllers all support software level RAID, it is not as fast or as reliable as hardware level RAID managed by the controller's own onboard circuitry. Storage controllers can resolve performance, security and availability server specific requirements. Factors include:

Interface DTR: SATA-II (SATA-300) supports a 300MB/sec DTR (Data Transfer Rate) from the device's buffer to the host controller (and vice versa), but the host controller's fabric, based on its total number of actual channels, will affect overall performance. SCSI Ultra320 (320MB/sec) is comparable in performance but considered far more stable when there are more than a few hard drives. Fibre Channel, which carries SCSI over a serial link, supports link rates of 1Gbit/sec, 2Gbit/sec and now 4Gbit/sec for the PC, or roughly 100, 200 and 400MB/sec in each direction. Note: even at these rates, a Fibre Channel host adapter should be attached to the system through a high bandwidth slot such as PCI-X or PCI-Express rather than the shared 133MB/sec PCI bus.

An Adaptec Ultra 320 SCSI RAID Controller for PCI-X (64-bit/133MHz)
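Serial storage links such as SATA and Fibre Channel are rated by line rate, and with 8b/10b encoding every data byte travels as 10 line bits, so the payload rate in MB/sec is the gigabaud figure times 100. The 1, 2 and 4 gigabit Fibre Channel generations run at 1.0625, 2.125 and 4.25 gigabaud respectively. Parallel SCSI (Ultra320) does not use 8b/10b, so its 320MB/sec figure is already a byte rate. The function name is hypothetical.

```python
# Link line rate (gigabaud) -> usable payload MB/sec for 8b/10b-encoded links.
# With 8b/10b encoding every data byte travels as 10 line bits.


def payload_mb_s(line_gbaud: float) -> float:
    """Payload MB/sec in one direction of an 8b/10b serial link."""
    return line_gbaud * 1000 / 10


links = {
    "SATA-300": 3.0,
    "1Gb Fibre Channel": 1.0625,
    "2Gb Fibre Channel": 2.125,
    "4Gb Fibre Channel": 4.25,
}
for name, gbaud in links.items():
    print(f"{name:<18} {gbaud:>6} Gbaud -> {payload_mb_s(gbaud):6.1f} MB/sec")
```

This is where SATA-300's 300MB/sec comes from, and why Fibre Channel generations land at roughly 100, 200 and 400MB/sec per direction.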

Maximum Drive Size Supported: This should not be an issue on new controllers. New SATA controllers support 48-bit LBA, which can address drives up to about 144PB (128PiB, or 131,072TiB) with 512-byte sectors, and current SCSI controllers likewise use large block addresses. Older parallel ATA host controllers may have the classic 28-bit LBA limit of about 137GB (128GiB) maximum hard drive capacity and should not be considered.
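The LBA limits above follow from the address width times the sector size. This sketch assumes traditional 512-byte sectors; the function name is hypothetical.

```python
# Addressable capacity for a given LBA (Logical Block Address) width.
# Assumption: traditional 512-byte sectors.
SECTOR_BYTES = 512


def lba_limit_bytes(address_bits: int, sector_bytes: int = SECTOR_BYTES) -> int:
    """Largest capacity reachable with `address_bits`-bit sector addresses."""
    return 2 ** address_bits * sector_bytes


for bits in (28, 48):
    limit = lba_limit_bytes(bits)
    print(f"{bits}-bit LBA: {limit:,} bytes = {limit / 10**9:,.1f} GB "
          f"= {limit / 2**40:,.3f} TiB")
```

The 28-bit limit is 137,438,953,472 bytes, about 137GB in decimal units or exactly 128GiB; the 48-bit limit is about 144PB, or 131,072TiB.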

Maximum Number of Drives Supported: The total number of hard drives a SATA controller supports is determined by the number of attachment ports on the card. SCSI U320 natively supports 15 devices (they can all be hard drives) per channel. SCSI also supports other device types, while SATA is fairly well limited to internal hard drives and optical drives. SCSI natively supports external devices, while eSATA is a recent addendum to SATA-II with limitations in cable length and number of devices supported (existing controllers that lack an eSATA port support none). Fibre Channel switched fabrics use 24-bit port addresses and so can address on the order of 16 million devices, which can be hundreds of meters from the controller.

Integrated RAID Support: SATA RAID controllers have become available in motherboard south bridge chipsets for end user products, but SCSI RAID controllers have been around for years. One key factor in choosing a RAID controller is the amount of onboard cache, which it needs in order to make the calculations required for splitting/reunifying data going out to and coming in from the RAID hard drives. The forms (levels) of RAID supported are also important and will be covered in detail in the RAID lecture.
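The parity calculations a RAID controller performs are, for RAID 5, byte-wise XOR. This minimal sketch shows the principle on one hypothetical stripe; a real controller also rotates parity across drives, does the XOR in dedicated hardware, and uses its onboard cache to buffer writes. The function name and data are hypothetical.

```python
# RAID-5 style parity: the parity block is the byte-wise XOR of the data
# blocks, so any single lost block equals the XOR of all the survivors.
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


stripe = [b"SERV", b"ER-D", b"ATA!"]   # hypothetical data blocks, one per drive
parity = xor_blocks(stripe)            # written to the parity drive

# Simulate losing drive 1 and rebuilding its block from the survivors.
survivors = [stripe[0], stripe[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt)
```

Because XOR is its own inverse, the same routine both computes parity on write and reconstructs a failed drive's data on read.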


Storage Devices - Hard Drives: Server storage requirements are in general far more rigorous and demanding than those of the standard PC, and the hard drives themselves are just as important as the controllers when designing the storage capabilities of the server. Server storage addresses maximum data capacity, security and availability; yet, having moving parts, the hard drive is at once the slowest and the least reliable data container in the entire server physical platform. There is no reliable method of predicting hard drive failure, despite such efforts as S.M.A.R.T. - Self Monitoring, Analysis and Reporting Technology. The only technology, albeit a quite robust one, that can address dubious hard drive reliability is RAID, at the software level, the hardware level, or both in a composite scheme. Any server claiming even basic availability will have to employ RAID in some form to overcome the inherent unreliability of hard drives. Factors in choosing hard drives include:

Number of and Capacity: When total storage capacity is an issue, it should be noted that a single large capacity hard drive will be more economical than two or more totalling the same capacity. However, RAIDs are designed to continue to function despite the failure of one of the hard drives of the RAID group. In other words, in order to set up a RAID, it is understood that the system will employ two or more hard drives.

Performance: Hard drive performance is measured, or benchmarked, by average seek time and read/write data transfer rates. Both of these benchmarks are in turn influenced directly by RPM, interface bandwidth, and platter utilization. RPM determines the amount of data passing beneath the read/write heads per second: the higher the RPM, the faster the raw data transfer rate. Interface bandwidth (e.g. SATA-II, which supports a DTR of 300MB/sec) sets the upper limit of the DTR between the hard drive buffer (onboard cache) and the host controller. Note that the maximum interface bandwidth can only be achieved in short bursts, since it is usually several times faster than the internal DTR from the read/write heads to the onboard cache (which, as already stated, is directly influenced by the RPM). Platter utilization is the most difficult performance factor to measure because hard drive manufacturers do not publish it. The term means how much of the usable platter surface is actually used to store data. Hard drives are CAV - Constant Angular Velocity devices using ZBR - Zone Bit Recording, in which data is stored as densely as possible on each track, so the outermost tracks are physically longer and hold more data than the innermost tracks. Since it takes the same amount of time (one revolution) to read either the outermost track (holding more data) or the innermost track (holding less data), the raw platter-to-buffer DTR is higher, as much as three times higher, for the outermost tracks than for the innermost tracks of the typical 3.5" form factor hard drive. If a drive employs a single platter holding 250GB, then once it is filled, data near the end of the drive (on the innermost tracks) will have read/write transfer rates up to three times slower than data at the beginning of the drive.

A 300GB hard drive using two platters of the same density holds only 150GB per platter, using only 60% of each platter's capacity and leaving the inner 40% of the usable storage area unused. This means that even when this drive is full, the data at its end will have a much higher read/write transfer rate than that of its single platter 250GB cousin. Within a given series of hard drives it is possible to extrapolate, from the number of platters used in each model, the storage bit density and roughly what percentage of each platter is actually utilized. However, the better approach is to control platter utilization at the user end by partitioning the drive and leaving the partitions at the end of the drive unused.
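The platter utilization argument can be modeled geometrically. This sketch assumes data is written from the outermost track inward, bit density is uniform across the recording area (an idealized ZBR), and a hypothetical data band from a 1.8 inch to a 0.8 inch radius with a hypothetical 100MB/sec outer track rate; real drives differ, and the function names are hypothetical.

```python
# Zoned bit recording model: transfer rate vs. how far the drive is filled.
# Assumptions (hypothetical): data is written from the outermost track inward,
# bit density is uniform across the recording area, and the data band runs
# from a 1.8 inch to a 0.8 inch radius.
import math

R_OUTER = 1.8          # inches, outermost data track (hypothetical)
R_INNER = 0.8          # inches, innermost data track (hypothetical)
OUTER_MB_S = 100.0     # hypothetical sustained rate on the outermost track


def radius_at_fill(fraction: float) -> float:
    """Track radius reached once `fraction` of the platter area is used."""
    used_area = fraction * (R_OUTER ** 2 - R_INNER ** 2)
    return math.sqrt(R_OUTER ** 2 - used_area)


def rate_at_fill(fraction: float) -> float:
    """CAV: one revolution per track, and bits per track grow with radius."""
    return OUTER_MB_S * radius_at_fill(fraction) / R_OUTER


for fraction in (0.0, 0.6, 1.0):
    print(f"{fraction:.0%} used: slowest data at {rate_at_fill(fraction):.0f} MB/sec")
```

With these radii the slowest data on a full drive moves at about 44% of the outer track rate, while a drive filled to only 60% (the two-platter 300GB case) never drops below about 72%. Leaving the end of a drive unpartitioned has the same effect.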

Internal vs. External: It has been noted that external hard drives are a definite security risk as are open and accessible ports to which external drives can be attached. Because of this many modern servers do not support external hard drives or even have ports to which they can be attached. The notable exceptions would be for the purposes of backup/restore/disaster recovery and the quorum drive(s) which are shared by members of cluster servers. Servers that only support internal hard drives are fulfilling a security server requirement.

Fixed vs. Hotswappable: Hotswappable hard drives allow hard drives to be added or changed out when they fail without having to power down the server. As a result, hotswappable hard drives are a definite availability solution for servers. Insecure and accessible hotswappable hard drives however, definitely represent a potential security risk.


Storage Devices - Optical Drives: Optical drives represent one of the more significant physical security risks to the server, in that they support removable media, which is one of the two most common physical virus vectors, and they are bootable, allowing the system to boot to an alternate operating system. Under this condition the local server operating system is inactive, so all of its data confidentiality security measures are down, leaving all data on the system vulnerable to destruction or theft. Having said that, a locked or normally inaccessible optical drive can be useful for installing the operating system or additional role specific software, and for distributing data/software to other servers or clients. In particular, a DVD±R/±RW drive can serve as an adequate backup/restore/disaster recovery drive. However, this still might not justify a permanently installed optical drive, given the security risk it represents.


Storage Devices - Tape Drives: Since security is a primary design consideration in servers, and data integrity is an integral part of security, it is crucial that every server have a complete data backup system in place, which includes considerations concerning the backup device, the backup media, the backup scheme, the backup schedule, backup media handling/storage, and backup testing in preparation for a real world disaster recovery situation. Tape drives are therefore an integral part of the server security requirements, and factors involved in selecting them include:

Capacity: Modern backup tapes do have very large capacities which may make them a suitable choice for systems with enormous amounts of data to be backed up.

Technological Life Span: Many historical tape drive technologies came and went within very short periods of time, stranding users of those transient companies' products. It can be difficult to tell whether a technology is destined to disappear quickly, but in general: industry standard technologies are usually long lived; tape drives that can use other manufacturers' media are likely to be well supported into the future; and companies that have been around for a long time and still support their older technologies are a much better choice than new companies with no long standing track record in the field.

Proprietary system: Many tape backup drives, and even the media for them, are proprietary, forcing the user to buy new media only from the manufacturer. Fortunately, most of these have disappeared from the market (see Technological Life Span above).

Generic system level drivers vs. proprietary backup software: Generic system level drivers allow the drive to be recognized by the operating system and allow any backup software to work with the drive. Proprietary software may suffer the same problems that proprietary hardware and media do (see Technological Life Span above).

Speed: Modern large capacity backup tapes may take a very long time to fill during a full system backup. While the backup itself can be left to run overnight, if it fails, an entire day's worth of data has not been backed up and is at risk of total loss. Large organizations are very willing to pay backup operators to stay on duty all night, if necessary, until the backup has successfully completed.
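Whether a full backup fits overnight is a matter of data volume divided by the drive's sustained rate. The sketch assumes a hypothetical 80MB/sec native (uncompressed) rate and a six hour window, and that the server can feed the drive fast enough to keep it streaming, which is not always the case. The function names are hypothetical.

```python
# Backup window estimate: how long a full backup takes at a given rate.
# Figures are hypothetical; use the drive's native (uncompressed) rate.


def backup_hours(data_gb: float, drive_mb_s: float) -> float:
    """Hours to stream `data_gb` gigabytes at `drive_mb_s` MB/sec."""
    return data_gb * 1000 / drive_mb_s / 3600


def fits_window(data_gb: float, drive_mb_s: float, window_hours: float) -> bool:
    """True if the full backup finishes inside the available window."""
    return backup_hours(data_gb, drive_mb_s) <= window_hours


for data_gb in (400, 2000):
    hours = backup_hours(data_gb, 80)
    ok = fits_window(data_gb, 80, window_hours=6)
    print(f"{data_gb} GB at 80 MB/sec: {hours:.1f} h, fits a 6 h window: {ok}")
```

Running the numbers ahead of time shows when a single drive can no longer finish overnight and a faster drive, a second drive, or an incremental scheme is needed.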

Reliability: Backup tapes have always been, for the most part, one of the least reliable storage media used in the PC. As such, the decision to use them must consider the problem of testing the backups for viability, and such testing must be part of the organization's regular backup scheme. Because of the media's limited reliability, the tape backup drive is certainly a definite security issue. However, not backing up the server at all makes the server useless, so the tape drive is a necessary evil. Also of note: tape drives are generally not bootable and so can be installed locally without fear of introducing this form of insidious security vulnerability. Being removable media, though, they are still quite effective physical virus vectors.
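One way to make backup testing routine is to restore to a scratch location and compare cryptographic digests of the restored files against the originals. This sketch uses SHA-256 from Python's standard library; the directory layout and function names are hypothetical, and files that legitimately changed after the backup ran will also be reported.

```python
# Verifying a test restore: compare SHA-256 digests of the original files
# with the files restored from backup media.
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hex SHA-256 digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_restore(source_dir: Path, restored_dir: Path) -> list[str]:
    """Relative paths that are missing or different in the restored copy."""
    problems = []
    for src in sorted(p for p in source_dir.rglob("*") if p.is_file()):
        rel = src.relative_to(source_dir)
        restored = restored_dir / rel
        if not restored.is_file() or sha256_of(src) != sha256_of(restored):
            problems.append(rel.as_posix())
    return problems


# Demonstration with throwaway directories standing in for the live data
# and for a restore from tape.
with tempfile.TemporaryDirectory() as tmp:
    source, restored = Path(tmp, "source"), Path(tmp, "restored")
    source.mkdir()
    restored.mkdir()
    (source / "a.txt").write_text("payroll")
    (source / "b.txt").write_text("orders")
    (restored / "a.txt").write_text("payroll")
    (restored / "b.txt").write_text("orders, truncated")  # simulated bad restore
    demo_problems = verify_restore(source, restored)

print(demo_problems)
```

An empty list means every file came back intact; anything else is a backup that would have failed in a real disaster recovery.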


Network Connectivity Devices: Servers are expected to service multiple concurrent network connections with other server nodes as well as with many, often hundreds or even thousands of, client nodes. Because of this, servers often need multiple network connectivity devices, special technology network connectivity devices, or both. The network connectivity devices employed on the server fulfill the server's concurrent connections and security requirements. Selection factors include:

Network Type: Ethernet, Fast Ethernet, Gigabit Ethernet, 802.11 wireless, or combinations as needed. Subtypes include, for example, 10BASE2 vs. 10BASE-T or 100BASE-TX vs. 100BASE-FX. Fiber optic technologies, including the fiber optic forms of Ethernet (IEEE 802.3), offer extra physical security in that they are extremely difficult to cut and splice (a security risk due to data line tapping), do not suffer from EMI/RFI at all (no security risk due to data integrity vulnerability), and support very high bandwidth over very long distances (superior server framework technologies).

An Allied Telesis 1000BaseSX fiber optic Gigabit Ethernet NIC for PCI-X (64-bit/133MHz)

Adapter features: These are particular to the network connectivity device itself and include the expansion bus interface, onboard CPU, onboard EEPROM, onboard cache and the amount of it, and networking specific features such as autosense (of the Ethernet frame type) or 802.1Q (VLAN support), to name just two.
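802.1Q VLAN support means the adapter can insert and recognize a 4-byte tag in each Ethernet frame: the 0x8100 Tag Protocol Identifier followed by a 3-bit priority, a 1-bit drop eligible indicator and a 12-bit VLAN ID. The sketch only builds and parses that tag; on a real server the NIC or switch hardware normally handles it, and the helper names are hypothetical.

```python
# Building and parsing an IEEE 802.1Q VLAN tag (4 bytes inserted after the
# source MAC address in the Ethernet frame).
import struct

TPID_8021Q = 0x8100  # Tag Protocol Identifier for 802.1Q


def vlan_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Return TPID + TCI: 3-bit priority, 1-bit DEI, 12-bit VLAN ID."""
    if not 0 <= vid <= 0xFFF:
        raise ValueError("VLAN ID must fit in 12 bits (1-4094 are usable)")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("priority must be 0-7 and DEI must be 0 or 1")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_vlan_tag(tag: bytes) -> tuple[int, int, int]:
    """Return (vid, pcp, dei) from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError(f"not an 802.1Q tag: TPID {tpid:#06x}")
    return tci & 0x0FFF, tci >> 13, (tci >> 12) & 1


tag = vlan_tag(100, pcp=5)
print(tag.hex(), parse_vlan_tag(tag))
```

Because the VLAN ID travels in every frame, a single physical NIC with 802.1Q support can sit on several logical networks at once.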

Single vs. Multiple adapters: Depending on the server's intended roles, more than one NIC may be needed. Multiple NICs may also serve as integral components in high availability systems, such as the dedicated interconnect between cluster server physical nodes, each of which also has another NIC attached to the client access network connectivity device(s).


I/O Devices: Servers sometimes do not even have I/O device ports, and if they do, as a security precaution they are behind a locked access panel. If and when I/O devices are needed, they are usually minimal, including a standard 102/104 key keyboard, 2 button mouse and adequate display capable of VGA mode at 800 x 600 pixel resolution.


External Peripherals: External peripherals, especially external storage devices, present a potential security threat, and often their ports are either not provided at all or are disabled. However, servers do have external peripherals not normally found on end user systems, which are an integral part of basic server design and implementation:

- KVM Switch: A KVM switch allows more than one server to share a single Keyboard, Video display and Mouse combination. This is done for two primary reasons: a server's local console is normally used so little that it makes no sense to dedicate these peripherals to it, and when there are multiple servers in the same server room it makes sense to invest in a single set of these external peripherals, which also reduces the physical space they occupy.

- Powerline Appliances: These include the surge protector, line conditioner, and UPS - Uninterruptible Power Supply. UPSs in particular often have a data interface with the host system so that in the event of a power failure they can signal the system that this has occurred. The UPS software can then initiate a controlled shutdown rather than let the system suffer a sudden power loss when the batteries run down. Because the UPS can keep the system running, albeit for a relatively short period of time, it allows the system to ride through the majority of common power company interruptions and is the cornerstone of any system designed for a given level of availability.

- External storage: This includes SCSI drives, eSATA drives, USB drives and FireWire drives. Any cables, hubs, terminators and adapter connectors anticipated should be stored with the server as well.
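The controlled UPS shutdown described above reduces to two questions: how long the batteries will last at the current load, and whether that is still comfortably longer than a clean shutdown takes. The sketch uses a hypothetical 1000 Wh battery, 90% inverter efficiency and a 600 W load; real battery runtime is not linear in load, so actual UPS software relies on the runtime estimate the UPS itself reports. The function names and safety factor are hypothetical.

```python
# UPS runtime estimate and shutdown decision (hypothetical figures).


def runtime_minutes(battery_wh: float, load_w: float, efficiency: float = 0.9) -> float:
    """Rough runtime: usable battery energy divided by the load."""
    return battery_wh * efficiency / load_w * 60


def should_shutdown(on_battery: bool, minutes_left: float,
                    shutdown_minutes: float, safety_factor: float = 2.0) -> bool:
    """Start a controlled shutdown while there is still ample time to finish it."""
    return on_battery and minutes_left <= shutdown_minutes * safety_factor


print(f"Estimated runtime: {runtime_minutes(1000, 600):.0f} min")
for minutes_left in (60, 12, 8):
    decision = should_shutdown(True, minutes_left, shutdown_minutes=5)
    print(f"{minutes_left} min left -> shut down: {decision}")
```

The safety factor leaves room for a shutdown that takes longer than expected, so the server is always down cleanly before the batteries are exhausted.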


Lecture Only

- The basic server hardware design factors
- All major categories of PC components
- Server specific versions of standard PC components
- Server specific components not found in the standard PC
- Evaluating and comparing hardware components
- Evaluating name brand servers vs. building custom servers
- The server physical framework hardware